Once the AI coloring service “copainter” is released, I probably won’t bother coloring with Stable Diffusion myself anymore (it’s a hassle). Still, a really great ControlNet model has been released, so I’d like to introduce it.

Expected Environment for This Article

webUI: a1111 or forge

Model: SDXL series (For the generation examples that will appear later, I used Animagine XL 3.1)

This is a brief explanation for readers who already have the environment set up.

ControlNet Model to Use

This is the “really great ControlNet model” mentioned at the beginning.

Katarag_lineartXL-fp16.safetensors

This is Kataragi’s lineart model. At the moment, it seems to be the most accurate lineart model for the SDXL series.

Please look up how to obtain the ControlNet model and where to save it on your own.

How to Color My Own Line Art with Stable Diffusion

We will use ControlNet lineart with i2i.

i2i Settings

Select the i2i tab and input the image in the area that says “Drop your image here – or – click to upload.”

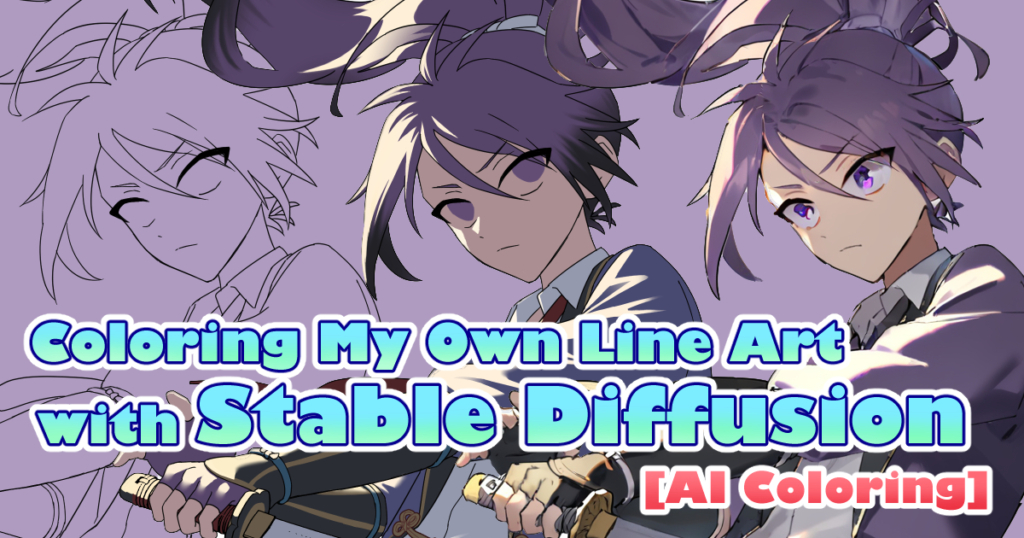

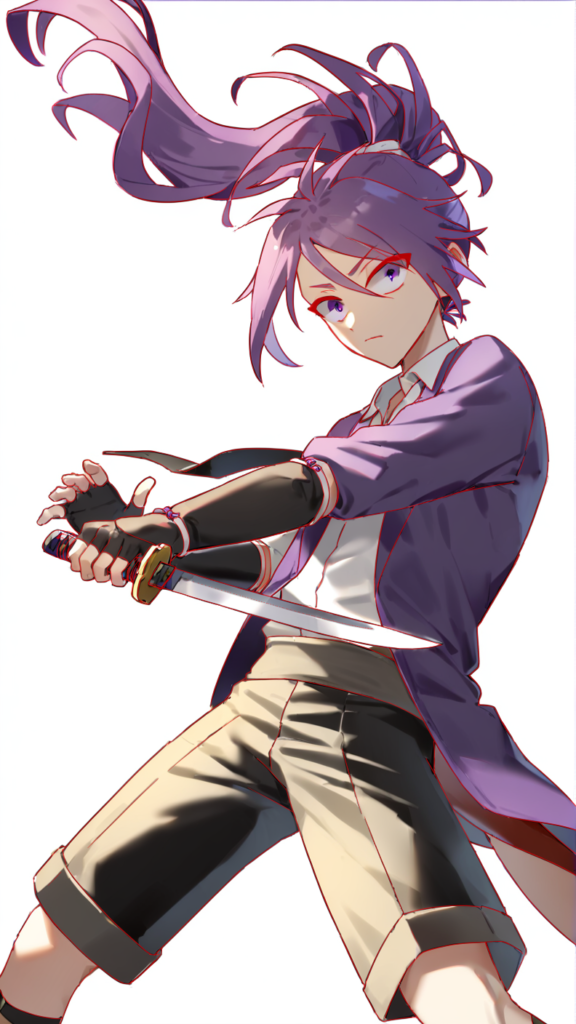

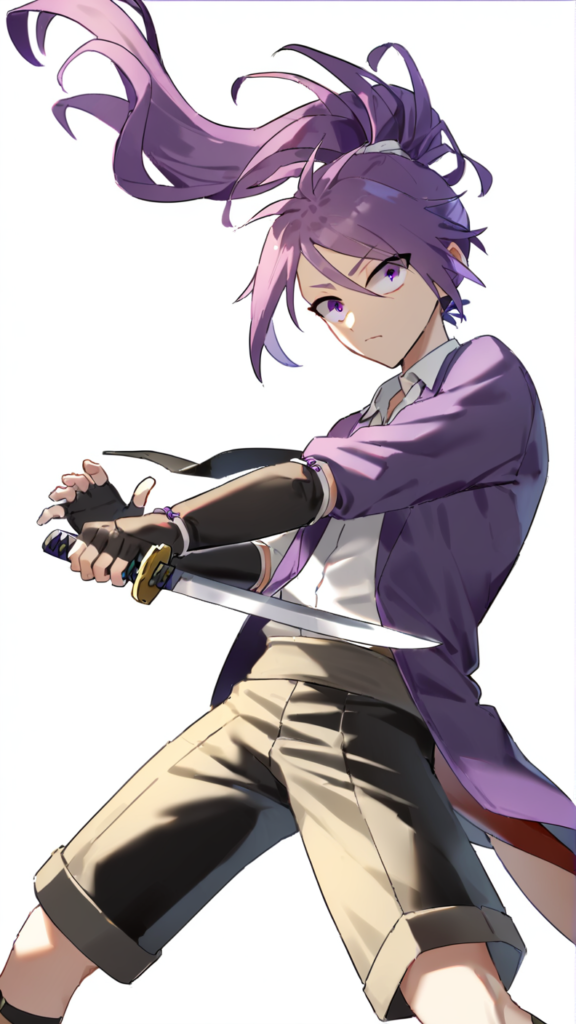

As an example, I used this image. After specifying the reference image in i2i, fill in the prompt using “Interrogate CLIP” or “Interrogate DeepBooru.” (Of course, you can also type it in yourself if you don’t mind the effort.)

Remove any unnecessary tags from the prompt and add anything you’d like to include.

If you want to generate at the same size as the original image, click the triangle ruler icon in the generated image size section to copy the original image’s size.

Set the Denoising Strength to a value of your choice. I think somewhere around 0.5 to 0.75 works well.

ControlNet Settings

Specify the image for lineart.

This time, I used this line art. If you leave this empty, the preprocessor can generate line art from the i2i reference image, but if you have your own line art, it’s better to input it so the result stays more faithful to your lines.

Select “invert (from white bg & black line)” for the preprocessor.

Select the model Katarag_lineartXL-fp16.safetensors.

You don’t need to change any other values. Please try generating it now.
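For readers who prefer scripting to clicking, the i2i and ControlNet settings above can be sketched as a request to the web UI’s HTTP API. This is only a rough sketch under several assumptions that are not from the steps above: the web UI is started with the `--api` flag and listens on `127.0.0.1:7860`, the ControlNet extension accepts the unit fields shown here, and the `module` and `model` strings match what your install reports (they are copied from the UI labels in this article and may need adjusting). The function names are made up for illustration.

```python
import base64
import json
import urllib.request

# Assumption: the web UI was launched with --api and listens on this address.
BASE_URL = "http://127.0.0.1:7860"


def encode_image(path):
    """Read an image file and return its contents as a base64 string."""
    with open(path, "rb") as f:
        return base64.b64encode(f.read()).decode("ascii")


def build_img2img_payload(base_b64, lineart_b64, prompt, width, height,
                          denoising_strength=0.65):
    """Build a request body that mirrors the i2i and ControlNet settings."""
    if not 0.0 <= denoising_strength <= 1.0:
        raise ValueError("denoising_strength must be between 0 and 1")
    return {
        "init_images": [base_b64],      # the i2i reference (base colors)
        "prompt": prompt,
        "width": width,                 # same size as the original image
        "height": height,
        "denoising_strength": denoising_strength,
        "alwayson_scripts": {
            "controlnet": {
                "args": [
                    {
                        "enabled": True,
                        "image": lineart_b64,  # your own line art
                        # Assumption: copied from the UI label; your install
                        # may expect a shorter internal preprocessor name.
                        "module": "invert (from white bg & black line)",
                        # Assumption: use the exact name your install lists.
                        "model": "Katarag_lineartXL-fp16",
                    }
                ]
            }
        },
    }


def post_img2img(payload, base_url=BASE_URL):
    """Send the payload and return the generated images as base64 strings."""
    req = urllib.request.Request(
        base_url + "/sdapi/v1/img2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)["images"]
```

Building one payload per Denoising Strength value (for example 0.5, 0.65, and 0.75) and passing each to `post_img2img` would give a side-by-side comparison without touching the UI.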

Generation Results

Overlaid Line Art

This is the generated image overlaid with line art. The line art is overlaid in 70% red.
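For anyone who wants to reproduce this kind of check image, the overlay can be thought of as a per-pixel blend. The sketch below is my reading of “70% red,” not a description of the exact tool used here: it assumes the line art is grayscale with black lines on white paper, and that red is blended at 70% opacity wherever a line is present. The function name is hypothetical.

```python
def overlay_red(base_rgb, line_gray, opacity=0.7):
    """Blend pure red over one pixel of the generated image.

    base_rgb:  (r, g, b) of the generated image, each 0-255
    line_gray: gray value of the line art pixel (0 = black line, 255 = white)
    opacity:   how strongly the red line covers the image (0.7 = 70%)
    """
    # How much "line" is at this pixel: 1.0 for solid black, 0.0 for paper.
    coverage = (255 - line_gray) / 255
    alpha = opacity * coverage
    red = (255, 0, 0)
    # Standard alpha blend, channel by channel.
    return tuple(round(b * (1 - alpha) + r * alpha)
                 for b, r in zip(base_rgb, red))
```

Where the line art is white the generated pixel passes through unchanged, so any colored area peeking out from under the red lines shows where the generation drifted from the original drawing.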

Denoising Strength 0.5

With the SD1.5 series, I think even 0.5 would have produced more shading, but here the result was painted a bit too lightly.

Denoising Strength 0.65

Personally, I think this 0.65 setting was the best among those I tried. It captures shadows and light well while improving the overall look, and retains a decent amount of the original colors.

Denoising Strength 0.75

While 0.75 produces the best overall artwork, the colors change quite a bit. Since I separate the base colors into layers by part, I might be able to adjust the colors after generation using those layers.

Things I Couldn’t Try

Actually, when coloring with SD1.5 series, I used both lineart and tile together. Using tile helps maintain the colors better, allowing me to increase the Denoising Strength value and achieve a nice effect. However, this time, when I tried to do that, I encountered errors and couldn’t proceed.

Recently, I’ve been encountering frequent errors in my environment, and I can’t figure out what’s wrong. If anyone has successfully used tile together with lineart on SDXL, I would appreciate hearing how the results turned out.

What an amazing lineart!

With apologies to the developers, ControlNet accuracy has been a weak point of the SDXL series. As an artist, I want the AI to match my own art, but that hasn’t been possible. Then a test version of this model suddenly appeared, and before I knew it, Katarag_lineartXL was released. I had thought, “Coloring is already a one-horse race for copainter!” so I didn’t expect such an accurate model to be released openly. I really want image generation AI to be used by artists, so I’m very happy to see convenient models for artists being made available. Thank you!

The End

By the way… Here is the link to copainter.

For those who find local environments a bit tricky, I recommend copainter. It offers not only coloring but also inking and other features.

That said, a local environment has its own big advantages: unlimited use and fine-grained adjustments.

2024/12/29: Added a link to the article introducing copainter.